Troubleshooting AI Assistant

When using the AI Assistant in Bullhorn, you may occasionally encounter error messages that prevent prompts from running smoothly.

This guide outlines the most common error messages you might see, what they mean, and the steps you or your administrator can take to resolve them.

If you see an error not covered here, please contact Bullhorn Support for help troubleshooting.

AI Assistant is Missing in Bullhorn

If the AI Assistant isn’t visible, the cause is usually permissions, configuration, or feature availability. For step-by-step guidance, see “Why Can’t I See the AI Assistant in Bullhorn?”

Error Messages When Using AI Assistant

You may encounter the following errors in the AI Assistant slideout when running prompts:

Issue With Request / Response Timed Out / Endpoint Could Not Be Reached

Error messages include:

- “There was an issue with your request. Please try again later.”
- “The response from the LLM has timed out. Please try again.”
- “The LLM endpoint could not be reached. Please try again.”

An LLM, or Large Language Model, is a deep learning model that has been pre-trained on vast amounts of data and is used to power generative AI.

What it means:

These errors usually occur when the LLM provider times out or is temporarily unavailable.

What to do:

Try again later or check your LLM provider’s status page.

- Bullhorn LLM: GPT-4.1 nano Status OR OpenAI Status
- OpenAI: OpenAI Status
- Azure: Visit the Azure Status Page, press Ctrl + F, and search for "Azure OpenAI Service".

“This request has exceeded your token limit.”

What it means:

The request contains too much data for the LLM to process. This typically means the prompt is too long or includes excessive data.

What to do:

- Shorten your prompt.
- Remove any unnecessary data points.
- If your prompt includes additional data like Notes, try reducing the amount of data (e.g. number of notes) included.
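To get a feel for how much data fits within a token limit, the sketch below estimates token counts and trims a list of notes to a budget. It is only an illustration: the four-characters-per-token ratio is a common rule of thumb for English text (real tokenizers vary by model), and `estimate_tokens` and `trim_notes` are hypothetical helpers, not part of Bullhorn or any LLM provider's tooling.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough estimate only: assumes about four characters per token."""
    if not text:
        return 0
    return max(1, round(len(text) / chars_per_token))


def trim_notes(notes: list[str], budget: int) -> list[str]:
    """Keep notes from the front of the list until the token budget is used up.

    Assumes the list is ordered most recent first.
    """
    kept: list[str] = []
    used = 0
    for note in notes:
        cost = estimate_tokens(note)
        if used + cost > budget:
            break
        kept.append(note)
        used += cost
    return kept
```

With three notes of roughly 100 tokens each and a budget of 250, only the first two would be kept, which mirrors the effect of lowering the number of notes included with a prompt.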

“The response has exceeded your LLM's token limit and has been truncated.”

What it means:

The LLM’s response was too large, so the AI Assistant could not return it in full. You may receive an incomplete response.

What to do:

Reduce the length or complexity of your prompt to avoid overly large responses.

“The request has exceeded the max token limit. Historical conversation context or additional prompt data may have been truncated.”

What it means:

Too much data was included with the prompt (for example, Notes data). Some information was removed before being sent to the LLM, so the model may not have had the full context to generate an optimal response.

What to do:

Adjust the data included in the prompt via Prompt Studio. For example, reduce the number of recent Notes included or refine filters to exclude unnecessary data.

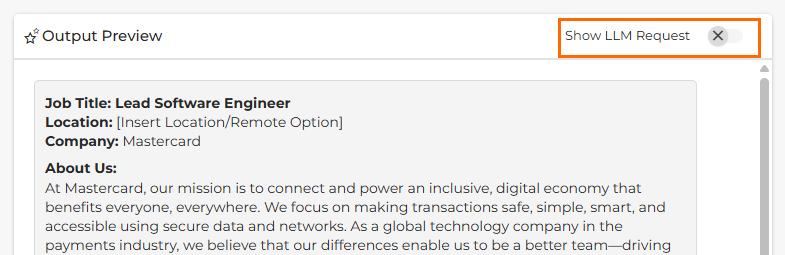

If you are an Admin and have access to the AI Assistant Prompt Studio, turn on Show LLM Request at the top of the output preview. This reveals exactly what is being sent to the LLM, making it easier to understand how prompts are processed and troubleshoot unexpected results.

“You have exceeded the usage limits of your LLM subscription. Please try again later or contact your Bullhorn Administrator”

What it means:

Your LLM subscription has reached its usage limits. Possible causes include:

- Requests-per-minute cap exceeded
- Overall request quota reached
- Subscription payment issue

What to do:

- If you are a User: Retry later. If the issue happens often, contact your Bullhorn Administrator.
- If you are an Admin: Verify payment details, confirm you are on the correct subscription tier, and adjust or upgrade limits if needed.
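For admins who test their own LLM subscription with a script outside Bullhorn, "retry later" is often implemented as exponential backoff: wait, retry, and double the wait after each rate-limit failure. The following is a minimal sketch under stated assumptions. `RateLimitError` and `call_with_backoff` are hypothetical names used for illustration and do not come from Bullhorn or any provider SDK.

```python
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's 'too many requests' error."""


def call_with_backoff(call, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run call(), retrying on RateLimitError with delays of 1s, 2s, 4s, ..."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)
```

Backoff only helps with a requests-per-minute cap. If the overall quota is exhausted or there is a payment issue, retries will keep failing until the subscription itself is fixed.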

Error Messages During Customer-Hosted LLM Configuration

If you host your own LLM through OpenAI or Azure, you may see these errors during setup.

Invalid or Incorrect Credentials

Error messages include:

- “LLM Configuration is missing required data. Please contact your ATS Administrator”
- “Incomplete LLM property configuration. Please contact your Bullhorn Administrator.”
- “The apiKey provided is incorrect.”
- “The modelName provided is incorrect.”

These errors point to invalid or incorrect authentication details, such as an API key, model name, or instance name.

If API Key is Invalid:

Common causes include:

- There is a typo or an extra space in your API key.
- You are using a revoked, deleted, or deactivated API key.
- You are using an API key that belongs to a different organization or project.
- Your API key lacks required permissions.
- An old, revoked API key might be stored locally.
  - Clearing browser cache and cookies will help resolve this specific issue.

What to do:

- Locate your API key:
  - OpenAI: You can find your API key in your Account Settings. Alternatively, go to General Settings and select your project.
  - Azure: You can find your API key in your Azure OpenAI portal, under Resource Management > Keys and Endpoint.
- Verify that you’ve entered the correct API key in the API Key field in Bullhorn.
- If unsure, generate a new API key and update Bullhorn with it.
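Stray whitespace picked up while copying a key is easy to miss by eye. The sketch below shows one way to check a pasted value before entering it in Bullhorn. `check_api_key` is a hypothetical helper, and it only detects whitespace problems; it cannot tell whether a key is revoked, lacks permissions, or belongs to another organization.

```python
def check_api_key(raw: str) -> list[str]:
    """Return a list of whitespace-related problems found in a pasted key."""
    problems: list[str] = []
    stripped = raw.strip()
    if raw != stripped:
        problems.append("leading or trailing whitespace")
    if any(ch.isspace() for ch in stripped):
        problems.append("whitespace inside the key")
    if not stripped:
        problems.append("key is empty")
    return problems
```

For example, `check_api_key(" EXAMPLE-KEY ")` reports leading or trailing whitespace, while `check_api_key("EXAMPLE-KEY")` returns an empty list.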

If API Key or Model Name is Incorrect:

Reasons for an incorrect API key or model name can vary by provider:

- OpenAI: Incorrect model name used.
- Azure: Incorrect deployment name, instance name, or API version entered.

What to do:

- OpenAI: Check that you have entered the correct Model Name in Bullhorn.
  - As of late 2025, we recommend “gpt-4.1-nano.” If in doubt, contact Support for the latest recommendation.
- Azure: Check that you have entered the correct Deployment Name, Instance Name, and API Version in Bullhorn:
  - Deployment Name: You can find your deployment name in Azure under Resource Management > Model Deployments.
  - Instance Name: Make sure you are using the name set in Azure under Instance Details > Name.
  - API Version: Make sure this is set to 2023-05-15 in Bullhorn. (Bullhorn always uses the latest version on the backend, but this specific value is required for Azure as of 2025.)
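It can help to see how the three Azure values fit together. Azure OpenAI's REST endpoint for chat completions combines a resource name, a deployment name, and an API version into one URL, which is why a mistake in any of them produces an error. The sketch below builds that URL. It assumes that Bullhorn's Instance Name corresponds to the Azure resource name; `azure_chat_url` and the example values are hypothetical, and this is not a description of how Bullhorn constructs its requests.

```python
from urllib.parse import quote, urlencode


def azure_chat_url(instance_name: str, deployment_name: str,
                   api_version: str = "2023-05-15") -> str:
    """Build an Azure OpenAI chat completions URL from the three settings.

    Assumption: instance_name is the Azure OpenAI resource name.
    """
    base = f"https://{instance_name}.openai.azure.com"
    path = f"/openai/deployments/{quote(deployment_name, safe='')}/chat/completions"
    return f"{base}{path}?{urlencode({'api-version': api_version})}"


# Placeholder values for illustration only.
print(azure_chat_url("my-instance", "my-deployment"))
```

If the printed URL does not match the endpoint shown in Azure under Keys and Endpoint, compare each of the three values against what is entered in Bullhorn.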

“The following fields may contain invalid characters:”

What it means:

This error can appear during Azure LLM setup and usually indicates that an invalid character was entered in the URL field.

What to do:

Remove any special characters from the URL field and try again.
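The error message does not specify which characters count as invalid, so the following sketch makes an assumption: it flags anything outside the characters that RFC 3986 allows in a URL (letters, digits, and a fixed set of punctuation). `invalid_url_chars` is a hypothetical helper for spotting likely culprits such as spaces or angle brackets, and Bullhorn's own validation may be stricter or looser.

```python
import string

# Assumption: characters permitted by RFC 3986 (unreserved, reserved, and "%").
ALLOWED = set(string.ascii_letters + string.digits + "-._~:/?#[]@!$&'()*+,;=%")


def invalid_url_chars(url: str) -> list[str]:
    """Return the distinct characters in url that fall outside ALLOWED, sorted."""
    return sorted({ch for ch in url if ch not in ALLOWED})
```

A value pasted with surrounding angle brackets or an embedded space would be flagged, while a plain `https://` address made of letters, digits, hyphens, dots, and slashes would return an empty list.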