Amplify Prompt Studio

The Amplify Prompt Studio allows you to create and manage saved prompts for use with Amplify Assistant, Amplify Chat, and Amplify Enrich.

You can choose from standard prompt templates or create your own prompts from scratch using a free-text field.

Prompts created in the studio are shared across your organization, making it a great way to standardize workflows and improve consistency.

Creating a Prompt

- Go to Menu > Admin > Amplify Admin.

- Click the Prompts tab.

- Select Add Prompt in the top right corner to create a new prompt.

- To edit an existing prompt, click the pencil icon next to the desired prompt.

- Complete each section as described below:

In this section, define where your prompt will be used, which entity it applies to, and whether it is enabled.

Entity

Select the primary entity the prompt will use. The entity determines which data fields the LLM can reference, and which entities the prompt will be available on when using record-level Amplify Assistant.

An LLM, or Large Language Model, is a deep learning model that has been pre-trained on vast amounts of data and is used to power generative AI.

Options include:

- Candidate

- Job

- Contact

Secondary Entity

Select a secondary entity to associate with your prompt. This allows users to include data from a related record.

For example:

- A Candidate-based prompt may use Job as a secondary entity to reference details about a specific job.

- A Job-based prompt may use Contact as a secondary entity to reference a related client contact.

When users select a secondary entity, the LLM uses data from both the primary and secondary entities to generate more tailored responses.

Secondary Entity Required

Enable this option if users must select a secondary entity before running the prompt.

If left unchecked, the secondary entity will be optional.

Location

Specify where the prompt will appear and be available:

- Amplify Card/Amplify Card - More Prompts: Adds the prompt to the Amplify Assistant and Amplify Chat prompt libraries.

  - Prompts with this location can also be used in the Enrich step of Bullhorn Automation.

- Automation: Makes the prompt available only in Bullhorn Automation’s Enrich step. These prompts will not appear in Amplify Assistant or Amplify Chat.

Prompt Enabled

Toggle Prompt Enabled to make the prompt available in the selected locations.

When disabled, the prompt remains saved but hidden from users.
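The settings above can be pictured as a small record with a few rules attached. The Python sketch below is illustrative only: `PromptSettings`, `Location`, and the method names are hypothetical and are not part of any Bullhorn or Amplify API. It simply encodes the behavior described in this section.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Location(Enum):
    # Hypothetical names for the two Location choices described above.
    AMPLIFY_CARD = "Amplify Card/Amplify Card - More Prompts"
    AUTOMATION = "Automation"


@dataclass
class PromptSettings:
    entity: str                              # "Candidate", "Job", or "Contact"
    location: Location
    secondary_entity: Optional[str] = None   # e.g. "Job" for a Candidate prompt
    secondary_entity_required: bool = False
    enabled: bool = False

    def visible_in_assistant_and_chat(self) -> bool:
        # Automation-only prompts never appear in Amplify Assistant or Amplify Chat.
        return self.enabled and self.location is Location.AMPLIFY_CARD

    def usable_in_enrich_step(self) -> bool:
        # Both locations make an enabled prompt usable in the Enrich step.
        return self.enabled

    def can_run(self, chosen_secondary_record: Optional[str]) -> bool:
        # A required secondary entity must be selected before the prompt runs.
        if not self.enabled:
            return False
        if self.secondary_entity_required and chosen_secondary_record is None:
            return False
        return True
```

For example, an enabled Candidate prompt with the Automation location and a required Job secondary entity would be usable in the Enrich step, hidden from Amplify Assistant and Amplify Chat, and unable to run until a job is selected.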

This section defines what the prompt does and how it appears.

Role

Select the role of the person who will use this prompt. Roles are managed under the Data Management tab.

Task

Choose from predefined task prompts or select Custom Task to build your own.

If you choose Custom Task, click Add Task to open a free-text field where you can write the custom prompt. Use specific, directive language in custom prompts to guide the AI.

Label

Enter a clear and descriptive label for your prompt. This name appears in Amplify Assistant, Amplify Chat, and the Automation Enrich step.

Define default settings that control tone, length, language, and creativity.

- Tone: Choose the desired tone, such as casual or formal.

- Length: Set a default response length (e.g., short for text messages, longer for emails).

- Language: Select the default response language.

- Response Style: Choose how much creative latitude the LLM takes when generating a response:

  - Precise: Consistent, factual responses. Best for job descriptions, candidate summaries, and data extraction.

  - Balanced: Mix of consistency and variety. Good for most use cases like outreach messages and interview questions.

  - Creative: Diverse, varied responses. Ideal for brainstorming, unique content, and exploring different angles.
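As a quick reference, the Response Style guidance above can be written as a lookup table. This is a sketch for planning purposes only: the dictionary keys and the `recommend_style` helper are hypothetical, and the mapping restates the recommendations in this article rather than anything Amplify exposes.

```python
# Hypothetical lookup built from the Response Style descriptions above.
RECOMMENDED_STYLE = {
    "job description": "Precise",
    "candidate summary": "Precise",
    "data extraction": "Precise",
    "outreach message": "Balanced",
    "interview questions": "Balanced",
    "brainstorming": "Creative",
    "unique content": "Creative",
}


def recommend_style(use_case: str) -> str:
    # Fall back to Balanced, which is described as good for most use cases.
    return RECOMMENDED_STYLE.get(use_case.strip().lower(), "Balanced")
```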

In this section, define which data points can be included when generating a prompt and which should be included by default.

Selecting Data Points

Click in the search field to open the list of available data points. The available fields are determined by the Entity you selected in the Settings section. You can select nearly any field associated with that entity.

Confidential fields (where Confidential = true or null) and byte-type fields cannot be selected.

All data points you select become available in the Sources picker in Amplify Assistant and Amplify Chat. Users can choose which of these sources to send to the LLM when generating a response.

Default Data Points

Select the checkbox next to any field you want to include by default when this prompt runs. Users can adjust these defaults within the Sources picker when they use the prompt.

Related Entity Data

If you added a Secondary Entity, you can also select which data points to include from that related record.

Unlike the primary entity, related entity data points are always included by default and cannot be modified by the user.
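The selection rules above can be summarized in a short sketch. The `Field` type and both function names are hypothetical and exist only for illustration; the logic mirrors the rules stated in this section (confidential or byte-type fields are excluded, users can adjust primary defaults, and related entity data points are always sent).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Field:
    name: str
    data_type: str                 # e.g. "string", "date", "byte"
    confidential: Optional[bool]   # None models a null Confidential value


def selectable(fields: List[Field]) -> List[Field]:
    # Confidential = true or null is excluded, so only an explicit False passes.
    # Byte-type fields are excluded as well.
    return [f for f in fields
            if f.confidential is False and f.data_type != "byte"]


def sources_sent(primary_defaults: List[str],
                 user_selection: Optional[List[str]],
                 related_points: List[str]) -> List[str]:
    # Users may override the primary defaults in the Sources picker;
    # None here means the defaults were left untouched.
    primary = primary_defaults if user_selection is None else user_selection
    # Related entity data points are always included and cannot be changed.
    return list(primary) + list(related_points)
```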

Use this section to include related Notes, Emails, Submissions, or Placements in your prompt. This enables the LLM to use richer contextual information.

Each data type includes options for how much and what kind of information to send to the LLM.

Notes Settings

If you enable Notes as additional data, configure:

- Include Notes by Default: Automatically include notes each time the prompt runs, or leave them optional.

- Note Actions Filter: Limit notes to specific action types (e.g., “Interview” or “Call”). If left blank, the most recent notes are included regardless of action.

- Note Content Filter: Enter keywords that must appear in the note content.

- Number of Notes: Choose how many notes to include (1–10). Amplify will use the most recent notes that match your filters.
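To make the interaction between the notes filters concrete, here is a minimal sketch of the selection logic described above. The `Note` type and `pick_notes` function are hypothetical. The sketch also assumes that matching is case-insensitive and that every keyword must appear in the note; this article does not specify either detail.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Sequence


@dataclass
class Note:
    action: str
    content: str
    added: date


def pick_notes(notes: Sequence[Note],
               actions: Sequence[str] = (),
               keywords: Sequence[str] = (),
               limit: int = 5) -> List[Note]:
    # Number of Notes accepts values from 1 to 10.
    if not 1 <= limit <= 10:
        raise ValueError("limit must be between 1 and 10")
    wanted = {a.lower() for a in actions}
    matches = [
        n for n in notes
        # A blank action filter accepts notes of any action type.
        if (not wanted or n.action.lower() in wanted)
        # Assumption: all keywords must appear, compared case-insensitively.
        and all(k.lower() in n.content.lower() for k in keywords)
    ]
    # Keep only the most recent matching notes.
    matches.sort(key=lambda n: n.added, reverse=True)
    return matches[:limit]
```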

Emails Settings

If you enable Emails as additional data, configure:

- Include Emails by Default: Automatically include emails each time the prompt runs, or leave them optional.

- Email Subject Filter: Include only emails whose subject contains specific keywords.

- Email Content Filter: Include only emails whose body contains specific keywords.

- Number of Emails: Choose how many recent matching emails to include.

Submissions Settings

If you enable Submissions as additional data, configure:

- Include Submissions by Default: Automatically include submissions every time the prompt runs, or leave them optional.

- Number of Submissions: Choose how many recent submissions to include.

Placements Settings

If you enable Placements as additional data, configure:

- Include Placements by Default: Automatically include placements every time the prompt runs, or leave them optional.

- Number of Placements: Choose how many recent placements to include.

Use this section to define which actions are available in the Actions dropdown once the prompt generates a response.

- Add Note: Opens a new note with Amplify's response automatically filled in.

- Send Email to Candidate: Opens the email compose window with Amplify's response as the body and the associated candidate as the recipient.

- Send Email to Contact: Opens the email compose window with Amplify's response as the body and the associated contact as the recipient.

- Update Primary Entity Field: Updates a selected field on the primary entity record with Amplify's response (e.g., automatically populate the Candidate’s Summary field).

When you've finished configuring your prompt, click Save.

Testing a Prompt

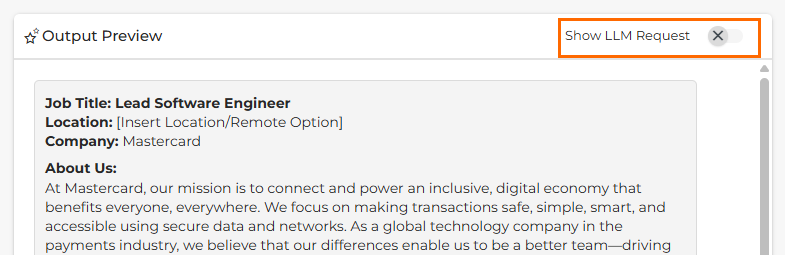

Once you've configured your prompt, we recommend testing with several records before enabling it to ensure it behaves as expected. The area on the right side of the screen allows you to preview and validate your prompt.

How to Test a Prompt:

- In the Select Entities to Preview search box, type to find a record to test the prompt with.

  - Make sure you select a relevant record with good-quality data.

- Click Update to generate the prompt. The output will appear in the Output Preview area.

- To test the same prompt again, click Regenerate.

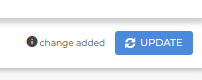

- You can continue to refine the prompt within the editor. After you make a change, "change added" is displayed, and you can click Update to test again with the updated prompt.

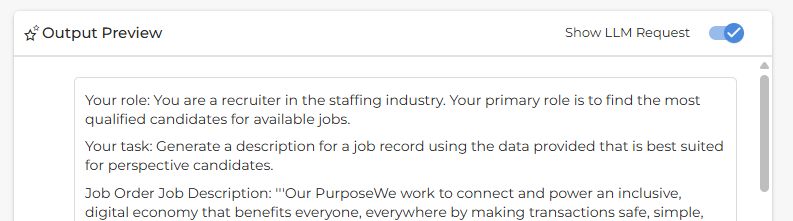

Show LLM Request

If you’d like to see the full details of what’s being sent to the LLM and the complete response it returns, toggle on Show LLM Request at the top of the output preview area.

This advanced view provides transparency into how the LLM processes your prompts. It’s especially useful for troubleshooting unexpected results and fine-tuning your prompts for better outcomes.